With an increase in AI-generated (“fake”) content that is indistinguishable from human-generated (“real”) content, the Internet is rapidly becoming less trustworthy. Today, we are at a pivotal point in terms of output quality for gen-AI models, which creates a real sense of urgency.

The problem of content authenticity is part of the larger question of trust infrastructure and the challenge of verifiability in our increasingly digital lives. Several problems within this category touch the intersection of AI, cryptography, and blockchains - a theme we plan to explore more in future posts.

In part 1, we focus on our ability to determine the authenticity of content in a post-AI world (the authenticity challenge), and the role that cryptography and blockchains play in solving this problem.

Thanks to Alex, Lisa, Olli, and Michael for your feedback!

Generative AI and The Authenticity Challenge

Generative AI (Gen-AI) is a subcategory of artificial intelligence that uses generative models to produce new and original content based on the data they were trained on. With more capable models, it has become easier than ever to create different kinds of content (text, images, video, audio, etc.). Meanwhile, the significant improvement in output quality has made AI-generated content more difficult to spot.

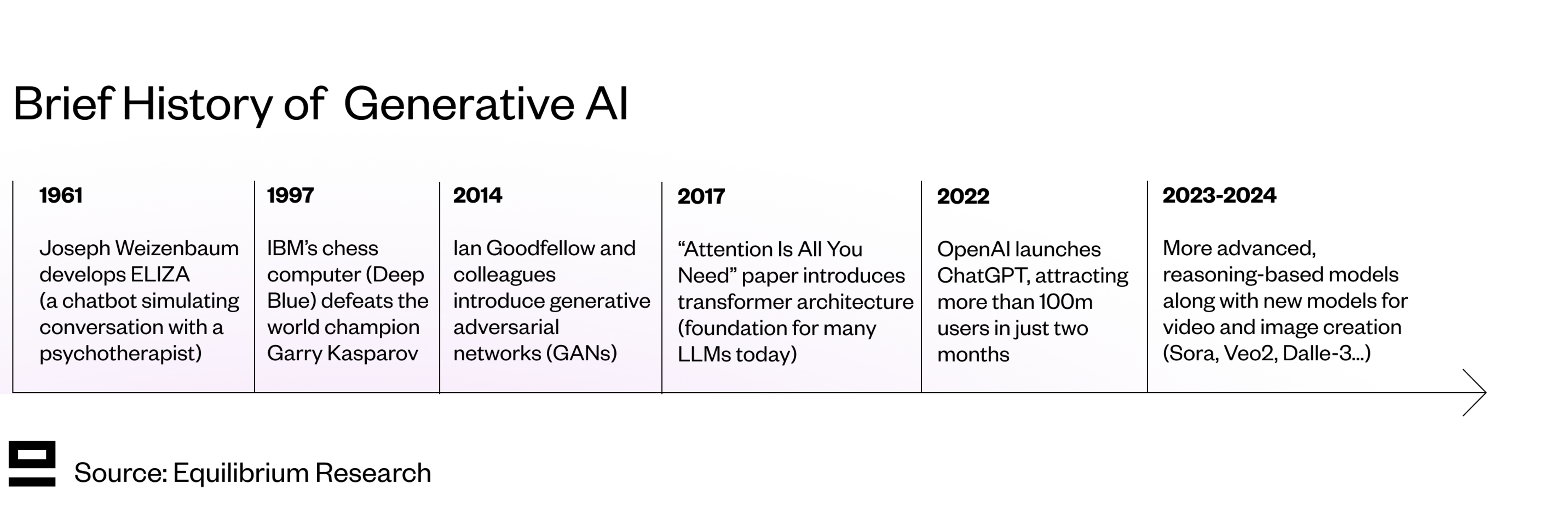

We’ve come a long way since the mid-1960s and Joseph Weizenbaum’s ELIZA chatbot, one of the earliest chatbots and an early precursor of today’s generative AI.

The Authenticity Challenge refers to the increasing problem of verifying content authenticity in the age of gen-AI. It’s driven by two main factors:

Gen-AI models are reaching the point where our intuition is no longer sufficient to differentiate between human- and AI-generated content (exhibit 1, 2, 3). This problem will only worsen as the models improve.

The marginal cost of producing content is approaching zero, making it easier to flood the Internet with AI-generated content and spam.

Combined, these two challenges pose a real threat to the Internet as we know it today.

Key Risks Of Generative AI

Generative AI is a wonderful tool that many people already leverage in their daily lives to help with brainstorming, research, technical debugging, creativity, and more. In the future, it’s likely that there will be lots of entirely AI-generated content (books, films, music, podcasts, etc.).

While many people today still care whether the content they interact with was created by a human or a machine (for sentimental reasons), this preference will probably weaken over time, and AI creators may become equal (or even superior) to human creators. Given that virtual influencers already exist, it’s not a stretch to imagine virtual artists such as “AI musicians” with a full backstory and “real” characters who perform their AI-generated music in virtual arenas (or physical arenas with virtual holograms).

The key problem, as Andrew Lu elegantly summarised in the blog post “Sign Everything”, has to do with our reduced ability to trust what we see on the Internet:

Authenticity is becoming increasingly scarce in the digital world. A piece of data is authentic if you know where or who it came from. The Internet gave us universal information distribution, and AI has given us unbounded content creation, but the tools to ensure that we can trust the data we receive have not caught up

The erosion of trust on the Internet has been ongoing for a long time, but the recent evolution of Gen-AI has accelerated it. The risks that this imposes on society can be split into three main categories:

Crime and Fraud: Generative AI models can be leveraged to create deepfakes, which make it much easier to impersonate people through voice, video, images, and more. Sumsub estimates that in 2024, deepfakes already accounted for 7% of all fraud. Generative AI can be leveraged for many different kinds of crimes, including:

Document fraud and bypassing biometric security verifications

Tricking people into transferring personal or corporate funds

Interfering with criminal investigations or court proceedings.

Harassment and damage to someone’s reputation, which can have long-standing consequences even if later proven false (we humans can’t fully remove impressions from our subconscious).

Political Influence: There are well-grounded fears about the impact of deepfakes (and gen-AI more broadly) on politics and the democratic system, whether used by politicians to further their personal causes or against them in misinformation and smear campaigns. While the impact of deepfakes in misinformation campaigns has been smaller than anticipated so far (less than 1% of all fact-checked misinformation in 2024 according to Meta), individual incidents show a glimpse of a potential future if nothing changes.

Losing The Human Element: There are some use cases where maintaining the human element is crucial, one concrete example being online dating. Without some proof of humanity, it’s hard to know today i) whether the person actually exists and ii) even if the pictures are real, whether their chat responses are genuine or AI-generated. This makes it difficult to gauge their personality. Going on a physical date is one way to verify the authenticity of that person, but it requires effort and doesn’t scale. While online dating is likely to change significantly, this challenge will persist at least in the short term.

How Is Authenticity Verified Today And Why Aren’t These Methods Sufficient?

Fake content, misinformation, and spam have existed much longer than AI-generated content. The big change is that as the marginal cost of producing content approaches zero, the potential negative effects are becoming orders of magnitude greater.

If i) AI-generated content becomes increasingly indistinguishable from human-generated content and ii) the Internet is flooded with it, then none of our existing methods will be sufficient.

Why is that? Let’s look at each method more closely:

Human judgment: Trusting our intuition, perception of reality, and previous knowledge to detect misinformation and determine whether something is real or not. While we can train ourselves to spot small disturbances, this is getting increasingly difficult as models evolve. In addition, this works for content that’s meant to be human-like (such as photo-realistic images) but not, for example, animations or art that aren’t meant to be physically correct.

Trusting the publisher: In many cases, we trust the publishing authority, even though the level of trust varies depending on the authority (e.g. reputable news site vs social media platform). However, outsourcing the problem is not sustainable if these authorities don’t have sufficient tools to verify authenticity. In addition, the trend of declining trust in authorities and institutions in the Western world (both the US and Europe) makes this approach less reliable.

Trust in publishers and traditional media keeps declining (source)

Trusting the fact-checker: Content moderation and fact-checking are typically done by platforms (such as social media companies) to ensure adherence to their content policies, or outsourced to third parties (NGOs/governments). In most cases, the process combines automated systems with manual input from humans (e.g., a human reviewer steps in when the system flags something). Besides the challenge of staying objective and avoiding political bias, this approach also becomes less reliable as the output quality of models improves.

Community notes: An integrated feature that enables users to add corrections to posts, with community upvotes used to determine the most useful ones. Community notes have been used on X/Twitter since 2021, and Meta recently announced that it would start replacing its third-party fact-checking programs with community notes. While community-driven analysis benefits from having more eyes on the problem, it faces the same issues as the first option (human judgment): without the ability for individuals to independently verify whether content was AI-generated, it’s not particularly effective. In addition, there is limited transparency in how specific community notes are chosen to be displayed under posts.

Visual cues: The blue checkmark on X was originally meant to signal that an account had been authenticated, but today it merely indicates that the account pays for a premium subscription. Consequently, there is no shortage of fake accounts with the blue checkmark. Even when the underlying authentication mechanism changes, our “trust habits” may not update at the same pace. Moreover, visual cues often can’t be trusted on their own: users need to go through the profile, check who else follows the account, analyze its posts, and more to get a clearer picture and build trust in the person or account.

Besides the lack of scalability (all five methods require human input and analysis), another key challenge is that none of them can provide strong proof of authenticity (i.e., a high degree of certainty). This is particularly important in high-impact scenarios.

For example, how can we verify whether an image represents a real situation in a warzone instead of it being fabricated using generative AI? Or how do we minimize the risk of different types of crime and fraud?

For that, new methods are required.

Content Verification In The Age Of AI

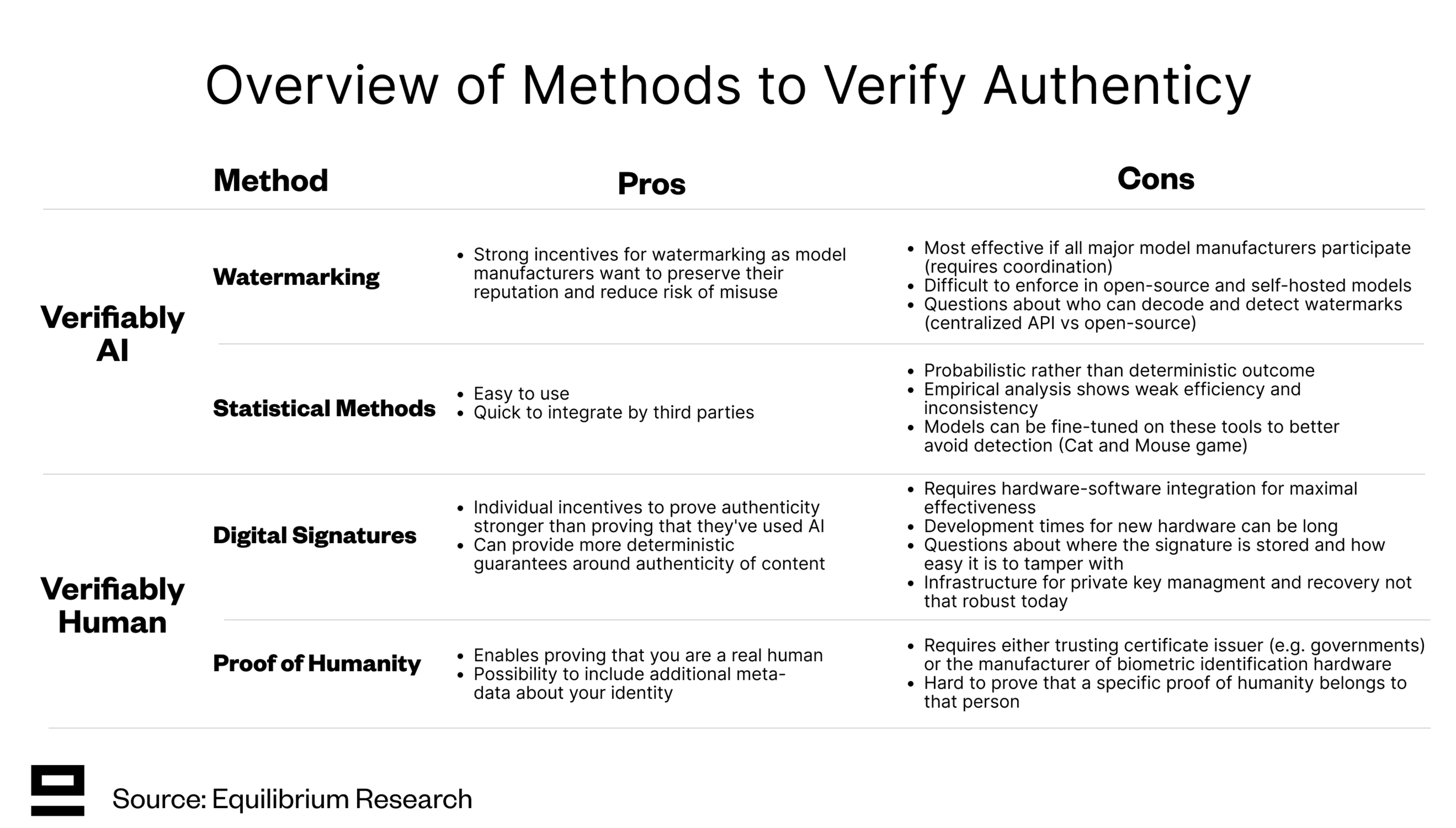

Broadly speaking, there are two main paths forward in this post-AI Internet - verifiably AI and verifiably human:

1. Verifiably AI = Prove Content Is AI-generated

Placing the burden of proof on the model developers and assuming that content is not AI-generated by default (“innocent until proven guilty”):

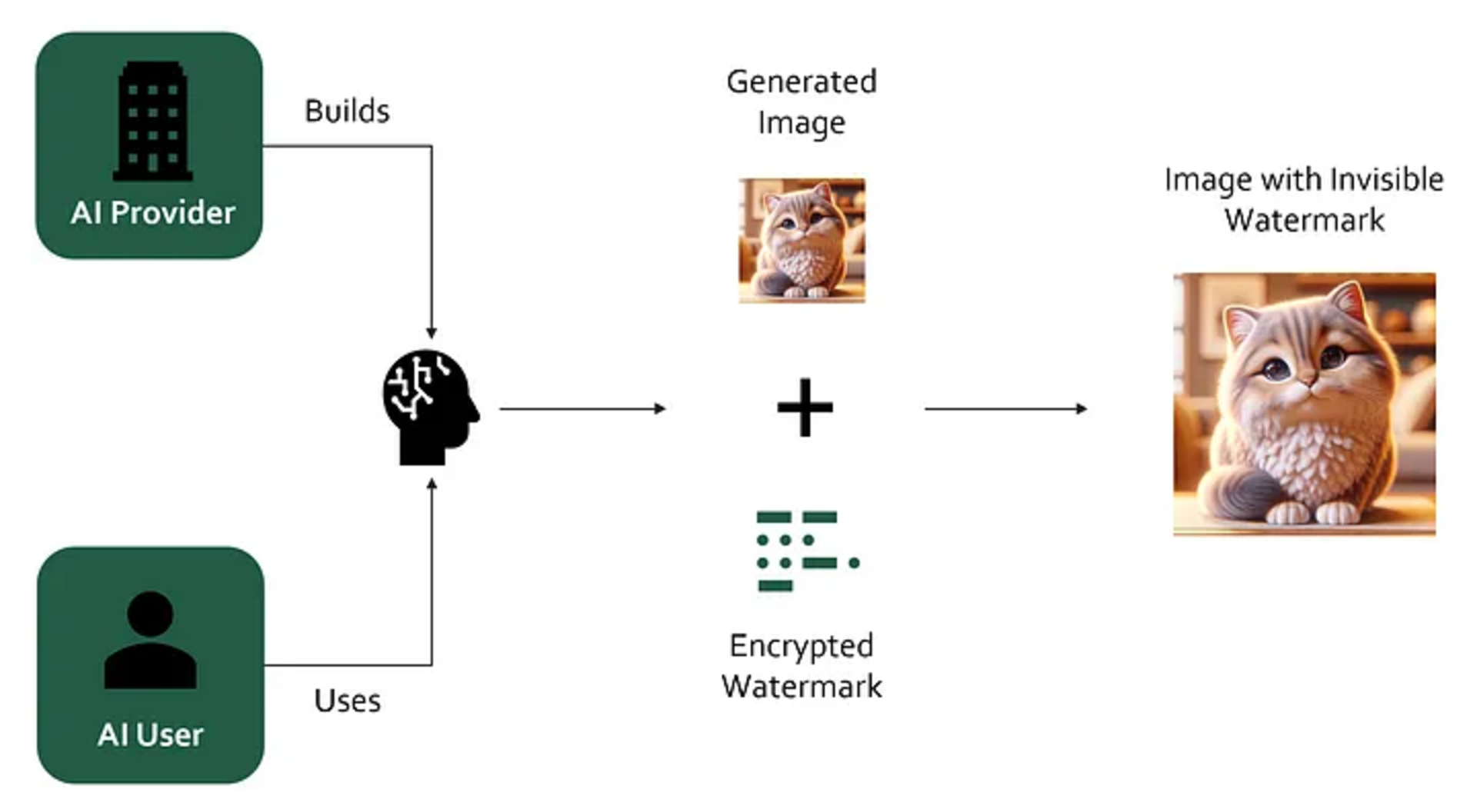

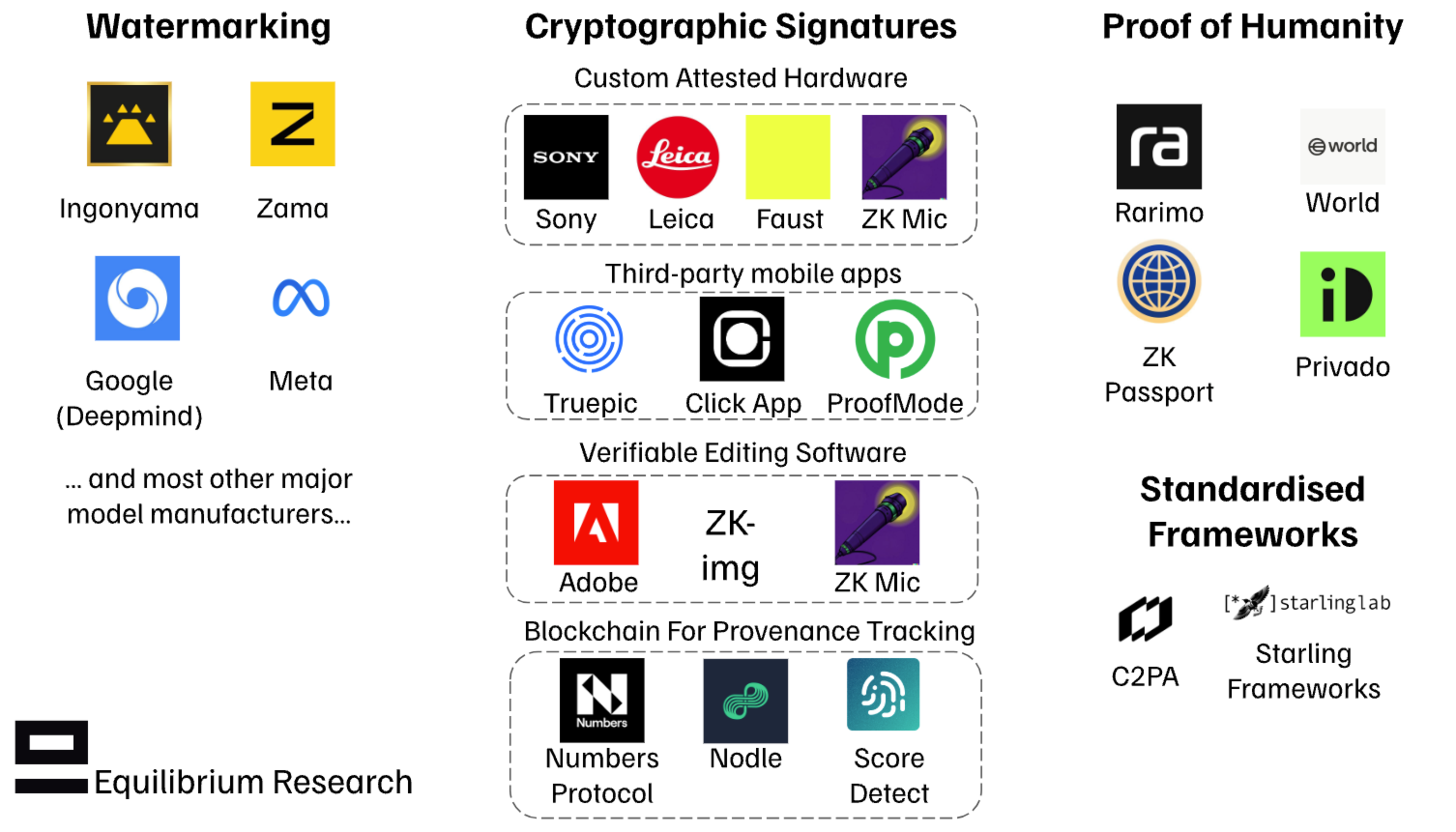

Watermarking: Subtle, often invisible watermarks are embedded into AI-generated content to help identify its origin and prevent unauthorized use. Watermarking can be integrated directly into the image generation process itself or applied as a separate service on top of image generation.

To be effective, watermarks need to be adopted by all large industry players (potentially through global regulation), resistant to manipulation (resizing, adding a filter, screenshotting the original image, etc.), and difficult (or impossible) to remove from the picture.

While there are steps in this direction by Google/DeepMind, Meta, Microsoft, Amazon, and others, there is a constant stream of research into how adversaries could get around these watermarks (e.g., by adding random noise). In addition, watermarking doesn’t work particularly well with open-source and self-hosted models, since removing the watermark can be as simple as editing the source code. Finally, there’s the question of who can decode and verify the integrity of the watermark (more on this later). With closed-source APIs, the model developer can act as a gatekeeper for integration and verification, while open-source approaches face security challenges (they are easier to circumvent).

Invisible watermarks are integrated into the content (source)
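To make the robustness concern concrete, here is a deliberately naive sketch. It hides a bit pattern in the least-significant bits (LSB) of pixel values and then checks how much of it survives small random noise. This textbook LSB scheme is chosen purely for illustration and is not the method used by Google/DeepMind, Meta, or any other vendor; pixels are modeled as a flat list of 8-bit integers, and all function names are hypothetical.

```python
import random

def embed_lsb(pixels: list[int], bits: list[int]) -> list[int]:
    """Overwrite the least-significant bit of the first len(bits) pixels (naive LSB scheme)."""
    out = list(pixels)
    for i, bit in enumerate(bits):
        out[i] = (out[i] & ~1) | bit
    return out

def extract_lsb(pixels: list[int], n: int) -> list[int]:
    """Read back the least-significant bit of the first n pixels."""
    return [p & 1 for p in pixels[:n]]

def add_noise(pixels: list[int], amplitude: int, rng: random.Random) -> list[int]:
    """Add small uniform noise (a stand-in for re-encoding or a filter), clamped to 0..255."""
    return [min(255, max(0, p + rng.randint(-amplitude, amplitude))) for p in pixels]

def bit_accuracy(extracted: list[int], expected: list[int]) -> float:
    """Fraction of watermark bits recovered correctly."""
    return sum(a == b for a, b in zip(extracted, expected)) / len(expected)

rng = random.Random(0)
image = [rng.randint(0, 255) for _ in range(4096)]  # synthetic 64x64 grayscale image
mark = [rng.randint(0, 1) for _ in range(256)]      # hypothetical 256-bit watermark

marked = embed_lsb(image, mark)
clean_accuracy = bit_accuracy(extract_lsb(marked, len(mark)), mark)
noisy = add_noise(marked, amplitude=2, rng=rng)
noisy_accuracy = bit_accuracy(extract_lsb(noisy, len(mark)), mark)
print(clean_accuracy)  # 1.0: the unmodified image yields the full watermark
print(noisy_accuracy)  # well below 1.0: noise of +/-2 scrambles many low bits
```

Robust watermarking schemes are designed to survive exactly this kind of perturbation, which the naive LSB approach cannot.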

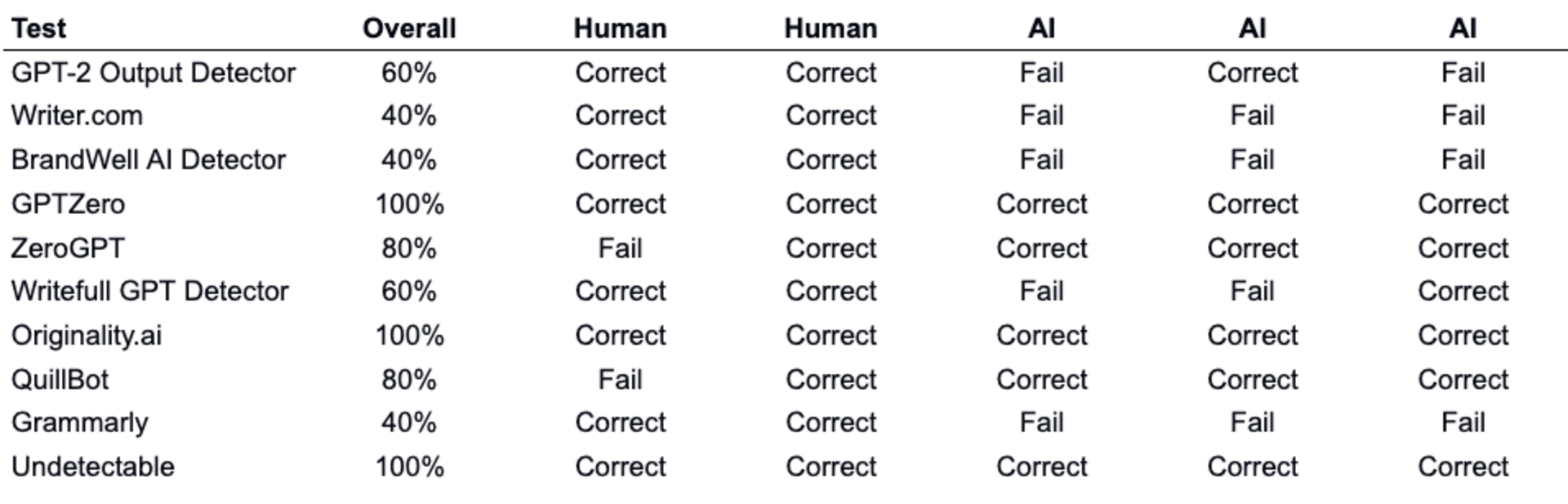

Statistical method (AI Checkers): Today, there are several tools that aim to identify AI-generated content through probabilistic approaches. Most of these are AI models themselves, trained on both human- and AI-generated content to recognize common patterns. While easy to use, these tools have proven inaccurate and inconsistent in empirical testing (e.g., biased against non-native English speakers). In addition, they can only give probabilistic (not deterministic) outcomes, which is acceptable for certain use cases, while others require more confidence. Finally, it’s a bit of a cat-and-mouse game: the risk of being caught by checkers can be reduced through smarter prompting or by fine-tuning the gen-AI models against these checkers.

Statistical models prove to be unreliable and inconsistent in empirical testing (source)

2. Verifiably Human = Prove Content Is NOT AI-generated

Placing the burden of proof on users and assuming all content is AI-generated unless it’s signed (“guilty until proven innocent”):

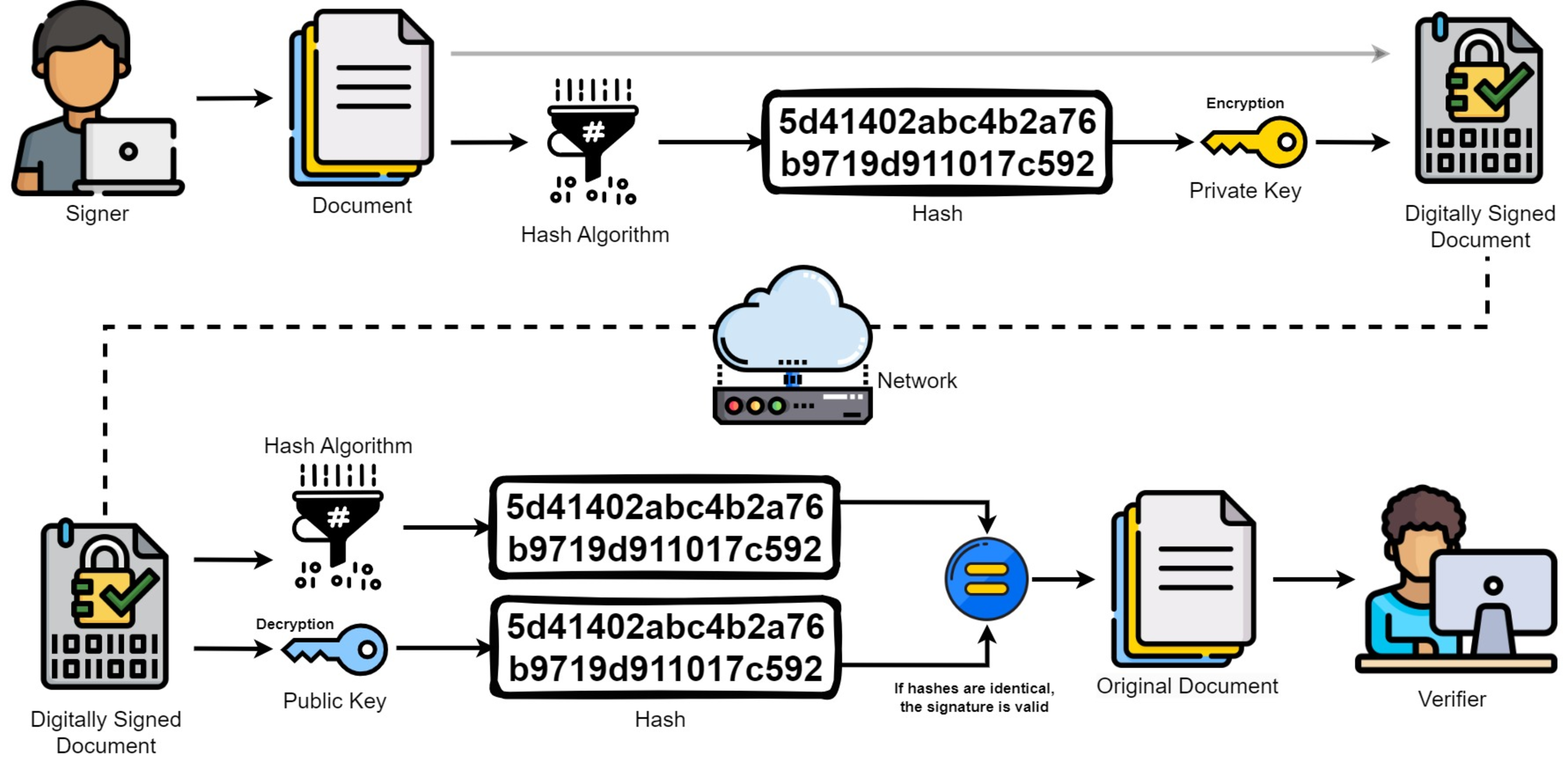

Digital Signatures: By leveraging public key infrastructure (PKI), we could digitally sign all human-created content with our private key (cryptographic signature). The signature can easily be verified using the corresponding public key, allowing other users to verify the authenticity and ensuring the content has not been tampered with since signing. It’s also possible to include additional metadata, such as by whom, when, where, and how the content was created. While digital signatures are not a new concept, they could be utilized much more widely as Andrew Lu notes in his post Sign Everything:

So far, digital signatures have found widespread adoption in TLS certificates, email, peer-to-peer payments, and more. But this is just the tip of the iceberg - every single piece of data that originates from a specific person or organization could be digitally signed

To minimize trust assumptions, digital signatures require coordination between hardware and software. Getting a signature from the hardware (e.g., an attested camera) is not sufficient, given that most content is edited before publishing: we also need to be able to prove the editing steps (and, if an AI tool was used, document what was done and how). Similarly, if a person uploads and signs content without any way to trace its origin, we must trust that person or organization, which is not always feasible.

Digital signatures leverage public key infrastructure (Source)
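Production systems typically use signature schemes such as ECDSA or Ed25519, which in Python require a third-party library. To keep the sketch self-contained, it swaps in a Lamport one-time signature built only on SHA-256: the private key is 256 pairs of random secrets, the public key is their hashes, and signing reveals one secret per bit of the message digest. The flow is the same (sign with the private key, verify with the public key, any change to the content breaks verification), but a Lamport key must never sign more than one message. All names are illustrative.

```python
import hashlib
import secrets

def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def keygen() -> tuple[list[tuple[bytes, bytes]], list[tuple[bytes, bytes]]]:
    # Private key: 256 pairs of random 32-byte secrets. Public key: the hash of each secret.
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(H(a), H(b)) for a, b in sk]
    return sk, pk

def digest_bits(message: bytes) -> list[int]:
    """The 256 bits of SHA-256(message), most significant bit first."""
    d = H(message)
    return [(d[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]

def sign(sk: list[tuple[bytes, bytes]], message: bytes) -> list[bytes]:
    # For each digest bit, reveal the secret at that position of the pair.
    # One-time only: signing a second message leaks enough secrets to allow forgeries.
    return [pair[bit] for pair, bit in zip(sk, digest_bits(message))]

def verify(pk: list[tuple[bytes, bytes]], message: bytes, signature: list[bytes]) -> bool:
    # Each revealed secret must hash to the public value selected by the same digest bit.
    return len(signature) == 256 and all(
        H(s) == pair[bit] for s, pair, bit in zip(signature, pk, digest_bits(message))
    )

sk, pk = keygen()
photo = b"raw bytes of a photo"             # hypothetical content
sig = sign(sk, photo)
print(verify(pk, photo, sig))               # True
print(verify(pk, photo + b" edited", sig))  # False: any change alters the digest
```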

Proof of Humanity: For certain use cases, such as online dating or podcasting, it might be necessary to prove that you or the other party is a real human. This could be done with tools such as ZK-passport (Rarimo, ZK Passport) or biometric identification (World). In other cases, more granular metadata around your identity is required, for example, to prove that you are employed at a specific organization or have a specific certification (Privado ID).

On their own, ZK identity solutions can’t prove that a specific proof of humanity belongs to the person presenting it, which means it could be faked. Combining ZK passports with biometric identification could help reduce this risk, but it likely requires specialized hardware (adding new trust vectors).
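ZK identity tools rest on zero-knowledge proofs. Production systems use general-purpose proof systems over complex statements (e.g., that passport data carries a valid issuer signature). As the smallest self-contained illustration of the underlying idea, the sketch below is a Schnorr proof of knowledge: the prover convinces anyone that it knows the secret x behind a public value y = G^x mod P without revealing x, made non-interactive with the Fiat-Shamir heuristic. The group parameters are toy values far too small for real use, the context string is hypothetical, and this is not how Rarimo, ZK Passport, or World implement their proofs.

```python
import hashlib
import secrets

# Toy parameters: P = 2*Q + 1 is a safe prime, and G = 4 (a quadratic residue)
# generates the subgroup of prime order Q. Far too small for real security.
P, Q, G = 2039, 1019, 4

def challenge(y: int, t: int, context: bytes) -> int:
    """Fiat-Shamir: derive the verifier's challenge by hashing the public transcript."""
    data = f"{G}|{y}|{t}|".encode() + context
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q

def prove(x: int, context: bytes) -> tuple[int, int, int]:
    """Prove knowledge of x with y = G^x mod P, revealing only (y, t, s)."""
    y = pow(G, x, P)
    r = secrets.randbelow(Q - 1) + 1  # fresh random nonce; reusing it would leak x
    t = pow(G, r, P)                  # commitment
    c = challenge(y, t, context)
    s = (r + c * x) % Q               # response
    return y, t, s

def verify(y: int, t: int, s: int, context: bytes) -> bool:
    # Honest proofs satisfy G^s = G^(r + c*x) = t * y^c (mod P); at real parameter
    # sizes, a prover without x cannot produce such an s except with negligible probability.
    c = challenge(y, t, context)
    return pow(G, s, P) == (t * pow(y, c, P)) % P

x = secrets.randbelow(Q - 1) + 1  # the prover's secret (hypothetical key)
y, t, s = prove(x, b"login-session-42")
print(verify(y, t, s, b"login-session-42"))            # True
print(verify(y, t, (s + 1) % Q, b"login-session-42"))  # False
```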

Show me the incentives and I will show you the outcome

None of these solutions will be adopted out of mere goodwill, but rather because they solve real problems.

Model manufacturers are incentivized to include watermarking to avoid reputational damage (e.g., being blamed for facilitating crime or misinformation). Meanwhile, individuals and corporations alike have both personal and commercial incentives to prove the authenticity of the content they’ve created. Regulation also plays a role, but it tends to lag innovation and be slow to enforce.

In practice, it’s likely that both the verifiably AI and verifiably human approaches will be used. The graph below summarises their unique benefits and drawbacks:

How Do Cryptography and Blockchains Help Solve The Authenticity Challenge?

While blockchains need cryptography, the relationship doesn’t hold the other way around (i.e. cryptography doesn’t need blockchains).

Hence, it’s interesting to start by asking what cryptography can do to solve the authenticity challenge and then look at what additional guarantees blockchains can provide.

Cryptography

We already interact with cryptography (often unknowingly) across many facets of life, from browsing the web to automated border control systems that scan our passports. A few ways it ties into the authenticity challenge include:

Provable and Privacy-Preserving Watermarking: A key challenge of watermarking is that it requires keeping the extractor model private, since exposing it would make the scheme susceptible to attacks. While the creator of the watermarking scheme (e.g., Meta) can run the extractor model and verify its authenticity, they can’t prove that to third-party users without exposing the model. In other words, it requires inherent trust in the model's creator.

Ingonyama’s zkDL++ leverages ZKPs to prove watermark extraction in a more trustless and privacy-preserving manner. Rather than users blindly trusting the model creator’s watermark extraction, the creator can attach a proof of extraction (ZKP) without revealing any sensitive model details. Users can then verify this proof independently.

Zama is attempting to tackle a different problem from the one zkDL++ solves: creating an independent, third-party solution that can apply the watermark without exposing the original content to the watermarking service (leveraging FHE). One challenge here is performance overhead, as exemplified by a proof-of-concept based on Invismark: inference with this method took ~20 minutes (M1 Max, 64GB RAM), though it would be faster on server-grade hardware.

Proteus leverages perceptual hashing (DinoHash), digital signatures, and MPC/FHE in an attempt to create more robust watermarks as well as preserve privacy in mapping image provenance (keeping both user queries and registry data private). However, they will likely face similar performance bottlenecks as the Zama use case above (at least in the short to mid term).
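Perceptual hashes behave very differently from cryptographic ones: visually similar images should map to nearby hashes. DinoHash is a learned perceptual hash; the sketch below instead uses the much simpler average hash (aHash) on a synthetic grayscale image to show the property, and contrasts it with SHA-256, which changes completely after a global brightness edit. The image and function names are hypothetical.

```python
import hashlib

def average_hash(pixels: list[list[int]], size: int = 8) -> int:
    """aHash: average the image down to size x size cells, then emit one bit per cell
    (1 if the cell is brighter than the mean of all cells)."""
    block = len(pixels) // size  # assumes a square image whose side is a multiple of size
    cells = []
    for by in range(size):
        for bx in range(size):
            total = sum(pixels[y][x]
                        for y in range(by * block, (by + 1) * block)
                        for x in range(bx * block, (bx + 1) * block))
            cells.append(total / (block * block))
    mean = sum(cells) / len(cells)
    h = 0
    for v in cells:
        h = (h << 1) | (1 if v > mean else 0)
    return h

def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")

image = [[x + y for x in range(64)] for y in range(64)]  # synthetic 64x64 gradient (0..126)
brightened = [[p + 10 for p in row] for row in image]    # global brightness edit

hash_original, hash_brightened = average_hash(image), average_hash(brightened)
sha_original = hashlib.sha256(bytes(p for row in image for p in row)).hexdigest()
sha_brightened = hashlib.sha256(bytes(p for row in brightened for p in row)).hexdigest()
print(hamming(hash_original, hash_brightened))  # 0: the perceptual hash is unchanged
print(sha_original == sha_brightened)           # False: the cryptographic hash changes completely
```

In practice, two images are usually treated as a match when the Hamming distance between their perceptual hashes falls below a chosen threshold.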

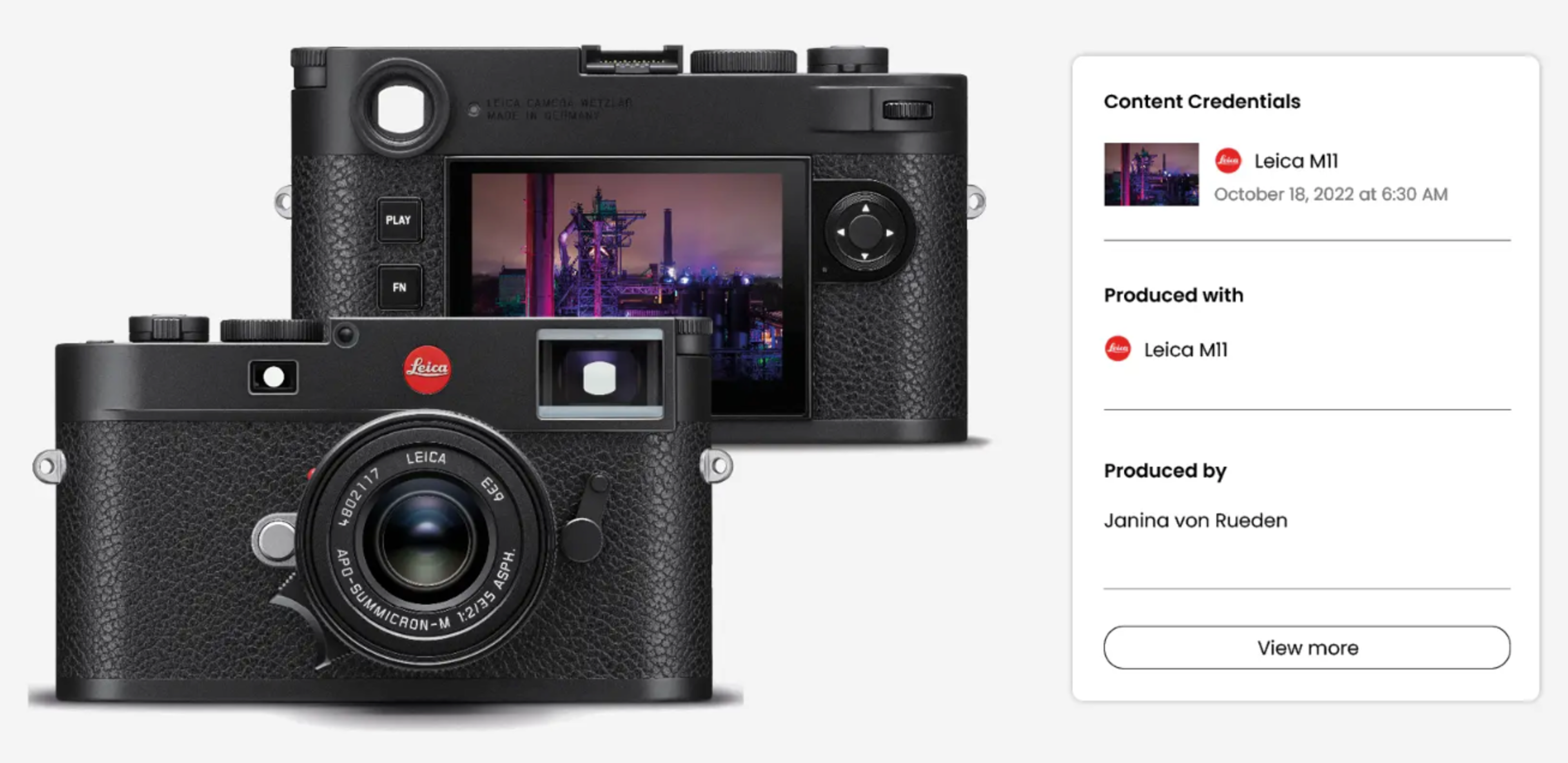

Attested Hardware: Signing every image, audio, or video taken/recorded with the device along with some additional metadata such as timestamp, location, etc. Most approaches leverage the secure enclave or TEEs in the device itself for signatures, but there are also some attempts to combine this with client-side ZKPs for easier and more trust-minimized verification.

Cameras with hardware attestation are special cameras that sign each picture with a private key and include additional metadata (location, timestamp, and device user). Some existing models from incumbents such as Leica and Sony already have this feature, but in an ideal world, every device would have it. There are also new entrants, such as Roc Camera by Faust, who are building a solution that combines client-side ZKP and TEEs for attesting sensor data.

Third-party mobile apps, such as Click-App (by Nodle), Truepic, and ProofMode, leverage the secure enclave in your smartphone and/or client-side ZK proving to sign photos and videos taken within the app. The signature can then be stored either on the blockchain or on a private server/cloud. While having signatures directly integrated into the native camera app would be smoother (and better for adoption), third-party apps can serve as an intermediate step.

ZK Microphone is an attested microphone that enables verified audio (e.g. for podcasting or interviews). The product also enables editing the content while retaining the authenticity and privacy of the original content (by leveraging ZKPs). The proof, signature, and edited audio are stored on IPFS, enabling easy verification of the signature and the proof.
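Whether the key lives in a camera, a phone’s secure enclave, or a microphone, the device ultimately signs a digest that binds the content to its capture metadata. The sketch below shows only that binding step, assuming the manifest is serialized as canonical JSON; the field names and values are hypothetical, and the signing itself (done inside the device’s TEE or secure enclave) is not shown. Real provenance formats such as C2PA define their own manifest structure.

```python
import hashlib
import json

def capture_manifest(content: bytes, metadata: dict) -> dict:
    """Bundle the content hash with capture metadata (hypothetical field names)."""
    return {"content_sha256": hashlib.sha256(content).hexdigest(), "metadata": metadata}

def manifest_digest(manifest: dict) -> bytes:
    # Canonical JSON (sorted keys, no extra whitespace) so that signer and verifier
    # hash exactly the same bytes regardless of dict ordering.
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(canonical).digest()

photo = b"raw sensor bytes"  # stand-in for real sensor data
meta = {"timestamp": "2025-01-01T12:00:00Z", "lat": 60.17, "lon": 24.94, "device_id": "cam-001"}
d = manifest_digest(capture_manifest(photo, meta))  # the device would sign this digest

tampered = capture_manifest(photo, {**meta, "timestamp": "2024-01-01T12:00:00Z"})
print(manifest_digest(tampered) == d)  # False: altering metadata invalidates the signed digest
```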

Verifiable Editing Software: Almost all content (images, video, audio, etc.) is edited before publishing. Verifiable editing software is focused on extending the usability of attested hardware by enabling content editing while still maintaining the cryptographic integrity of the signature and the ability to prove that content is authentic (hardware + software integration).

Adobe enables secure metadata to be attached to the content during the editing phase (“content credentials”). This allows for maintaining the cryptographic integrity of the attested hardware during editing and proving what gen-AI tools were used during editing (if any). However, this approach requires trusting the editing software (in this case, Adobe) to sign the steps along the transformation, and those guarantees are not very strong.

zk-IMG: Leveraging ZKPs to enable editing photos while maintaining cryptographic integrity, without having to rely on a single third party. The authenticity is verifiable through a ZKP (more permissionless and transparent), while privacy is preserved by only storing the hash of the original image and intermediate transformations.
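A minimal way to commit to an editing history, assumed here for illustration, is a hash chain: each step records the hash of the previous step, the operation and its parameters, and the hash of its output, so dropping or reordering a step breaks verification. This sketch only proves which steps were *claimed*; what zk-IMG and similar systems add is a ZKP that each transformation was actually computed correctly, which is not shown. The record format is hypothetical.

```python
import hashlib
import json

def h(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def record_edit(prev_link: str, operation: str, params: dict, output: bytes) -> dict:
    """Log entry (hypothetical format) that commits to the previous entry and to the output."""
    entry = {"prev": prev_link, "op": operation, "params": params, "output": h(output)}
    entry["link"] = h(json.dumps(entry, sort_keys=True).encode())
    return entry

def verify_chain(original: bytes, log: list[dict], final: bytes) -> bool:
    """Recompute every link; fails if a step was dropped, reordered, or altered."""
    prev = last_output = h(original)
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "link"}
        if body.get("prev") != prev:
            return False
        if entry.get("link") != h(json.dumps(body, sort_keys=True).encode()):
            return False
        prev, last_output = entry["link"], entry["output"]
    return last_output == h(final)

original = b"original pixels"  # stand-ins for real image data
cropped = b"cropped pixels"
adjusted = b"brightness-adjusted pixels"
e1 = record_edit(h(original), "crop", {"x": 0, "y": 0, "w": 800, "h": 600}, cropped)
e2 = record_edit(e1["link"], "brightness", {"delta": 10}, adjusted)
print(verify_chain(original, [e1, e2], adjusted))  # True
print(verify_chain(original, [e2], adjusted))      # False: the crop step was hidden
```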

Blockchains

Blockchains offer three main benefits related to content verification:

Stronger guarantees: Blockchains are immutable by design and it’s very difficult to tamper with something once it’s recorded on-chain (e.g. a signature or unique hash). While the signature and hash could be stored on centralized servers (such as Leica or Sony), blockchains offer stronger trust guarantees and tamper resistance.

Permissionless Verification: Anyone can verify the signature's authenticity by checking the blockchain or verifying a succinct proof. Integration is also permissionless, meaning that applications (such as social media platforms) can freely integrate the verification logic into their product or front-end without having to ask for permission.

Global and Neutral: Rather than a private database owned by a single company or country (which would give them outsized power), no single entity controls the blockchain (the database where signatures and records are stored). This improves trust and prevents the emergence of gatekeepers (e.g. through closed APIs).
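Writing every signature or hash on-chain individually can be expensive, so one common pattern (an assumption here, not necessarily what the projects listed next do) is to batch many hashes into a Merkle tree and publish only the root. Anyone holding a piece of content can then check its inclusion against the on-chain root with a proof whose size grows logarithmically with the batch. A minimal sketch with SHA-256 and hypothetical names:

```python
import hashlib

def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def merkle_root_and_proof(leaves: list[bytes], index: int) -> tuple[bytes, list[tuple[bytes, bool]]]:
    """Return the Merkle root and the sibling path for leaves[index].
    Each proof step is (sibling_hash, sibling_is_on_the_right)."""
    if not leaves:
        raise ValueError("need at least one leaf")
    level = [_h(b"\x00" + leaf) for leaf in leaves]  # prefix separates leaves from inner nodes
    proof = []
    while len(level) > 1:
        if len(level) % 2:  # duplicate the last node on odd-sized levels
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling > index))
        level = [_h(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof

def verify_inclusion(leaf: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Hash the leaf up the tree along the sibling path and compare with the published root."""
    node = _h(b"\x00" + leaf)
    for sibling, on_right in proof:
        node = _h(b"\x01" + node + sibling) if on_right else _h(b"\x01" + sibling + node)
    return node == root

sigs = [f"signature-{i}".encode() for i in range(5)]  # hypothetical signatures to anchor
root, proof = merkle_root_and_proof(sigs, 3)          # only `root` would go on-chain
print(verify_inclusion(sigs[3], proof, root))    # True
print(verify_inclusion(b"forged", proof, root))  # False
```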

Leveraging blockchain for provenance tracking can help guarantee that a piece of content was actually published by a specific authority rather than someone pretending to be them. Some projects to highlight here include:

ScoreDetect enables users to upload different types of content to the ScoreDetect platform along with a digital signature. The signature is stored on Skale (public blockchain) for immutability and easy verification. However, if no attested hardware is used, this requires trusting the author or person uploading the content.

Nodle has a group of products aimed at tackling the authenticity challenge from different angles (consumer, enterprise, and different types of content). Signatures are stored on the blockchain (initially built as a Polkadot parachain, but recently transitioned to zkSync). Part of the C2PA standard.

Numbers Protocol aims to ensure the authenticity and traceability of online media through its Capture app and related products. It’s powered by the Numbers blockchain, built as an Avalanche Subnet. While not exclusively focused on AI detection, the system can include metadata about content creation. Numbers Protocol also follows the C2PA standard.

What Could The Future Look Like?

It’s likely that both approaches (verifiably AI and verifiably human) will be part of the solution to the three key risks of generative AI. The latter, however, can provide more deterministic guarantees about the authenticity of content, given sufficient hardware-software integration.

In an ideal future, we would have:

Every physical device (camera, microphone, smartphone…) is attested hardware that signs recorded content with the owner’s private key, along with additional metadata.

Editing of content is enabled while still maintaining the cryptographic integrity of the signature from the attested hardware.

ZKPs are leveraged to prove what steps were taken in the editing (and whether AI tools were used) rather than trusting third-party editing software.

Signatures and hashes are stored on permissionless blockchains with sufficient decentralization to increase tamper resistance and enable permissionless verification.

All major model manufacturers enable strong watermarking schemes and leverage ZKPs to prove the integrity of the watermark in a more trustless and privacy-preserving manner.

Only signed content would be trusted as being human-generated. Meanwhile, most AI-generated content (fully or partially) would be sufficiently disclosed through watermarking. All of this should be easily verifiable by end-users and builders should be able to integrate verification streams permissionlessly into their products.

While there would still be risks of corrupted hardware, malicious parties getting around watermarking schemes, etc., these steps would significantly reduce the risk of AI-generated spam taking over the Internet and warping our perception of reality. While not perfect, it's significantly better than doing nothing.

Open Challenges To Getting There

While a future with stronger guarantees around content authenticity sounds promising, there are several challenges that stand in the way of getting there. Some open questions include:

Do we need a global standard for private keys linked to the creator's identity? While some regional digital identity initiatives (such as the EU's eID) could be leveraged here, existing zk-based identity solutions might provide a lower-friction approach since they leverage existing identification (passports).

How can we improve the infrastructure for private key management and recovery? This is a major friction point, as losing access to the wallet containing your private key could have serious consequences. While the more decentralized recovery approaches (social recovery, zk-email, or a combination of multiple methods) have stronger guarantees, it's possible that most people will choose a more centralized backup method for better UX.
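One way a recovery scheme can avoid a single point of failure is threshold secret sharing: the key is split among several guardians so that any k of them can reconstruct it, while fewer than k learn nothing about it. Below is a minimal Shamir secret sharing sketch over the prime field modulo 2^521 - 1 (a Mersenne prime, large enough to hold a 256-bit key). It is illustrative only; some smart-contract wallets implement social recovery differently, for example by letting guardians rotate the signing key rather than reconstruct it.

```python
import secrets

PRIME = 2**521 - 1  # Mersenne prime; the field must be larger than the secret

def split(secret: int, threshold: int, shares: int) -> list[tuple[int, int]]:
    """Hide the secret as f(0) of a random polynomial of degree threshold - 1."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be smaller than PRIME")
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def f(x: int) -> int:
        return sum(c * pow(x, i, PRIME) for i, c in enumerate(coeffs)) % PRIME

    return [(x, f(x)) for x in range(1, shares + 1)]  # one (x, f(x)) point per guardian

def recover(points: list[tuple[int, int]]) -> int:
    """Lagrange interpolation evaluated at x = 0."""
    secret = 0
    for j, (xj, yj) in enumerate(points):
        num, den = 1, 1
        for m, (xm, _) in enumerate(points):
            if m != j:
                num = num * (-xm) % PRIME
                den = den * (xj - xm) % PRIME
        secret = (secret + yj * num * pow(den, -1, PRIME)) % PRIME
    return secret

key = secrets.randbits(256)  # stand-in for a private key
shares = split(key, threshold=3, shares=5)
print(recover(shares[:3]) == key)                          # True: any 3 of 5 suffice
print(recover([shares[0], shares[2], shares[4]]) == key)   # True
```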

How do we avoid the "picture of a picture" problem? While attested hardware with additional metadata (location, time, the person behind the content, etc.) can help ensure the authenticity of content, it doesn't rule out the possibility of taking a picture of an (AI-generated) picture, or a recording of a recording. While the creator's personal and/or corporate reputation is already at stake ("social slashing"), it might be worthwhile to explore harder forms of punishment for certain use cases, such as putting up an economic stake or risking the loss of one's publishing license for a number of months. However, these are more difficult to enforce.

What role does regulation play? This piece has mostly focused on technology-driven solutions, yet regulation and legislation often play important roles in shaping the future. While the Internet is natively global, regulation will likely differ by region, which complicates how authentication methods will evolve and be integrated into different services. The most promising path forward would be a global standard that everyone adopts, similar to the HTTP and email protocols.

Conclusion

Generative AI has accelerated the deterioration of trust on the internet, but cryptography and blockchains can work hand-in-hand to restore it.

In many ways, cryptography does the heavy lifting while blockchains act as the cherry on top of the cake. Although blockchains alone can't guarantee authenticity, they can provide stronger guarantees around tamper resistance along with easier verification.

Verifiably human approaches (more specifically, digital signatures) are our best bet for verifying authenticity with strong guarantees. However, they require hardware-software integration to maintain cryptographic integrity all the way from the device where content is created, through the editing software where it’s manipulated, to where the signature is stored and easily verifiable by anyone. Additional methods for punishment might also be needed to avoid the "picture of a picture" problem.

However, watermarking also plays a part. While it may not stop bad actors, it does benefit good actors.

Hence, when it comes to the question of which approach we should pursue to verify authenticity, verifiably human or verifiably AI, the answer is not so much one or the other. Instead, we should pursue both.
